Background

Our sales demo promised self-serve, no-code custom AI model training: customers could go from a policy to an AI model that would moderate content exactly as their policy writers or Trust & Safety leadership would.

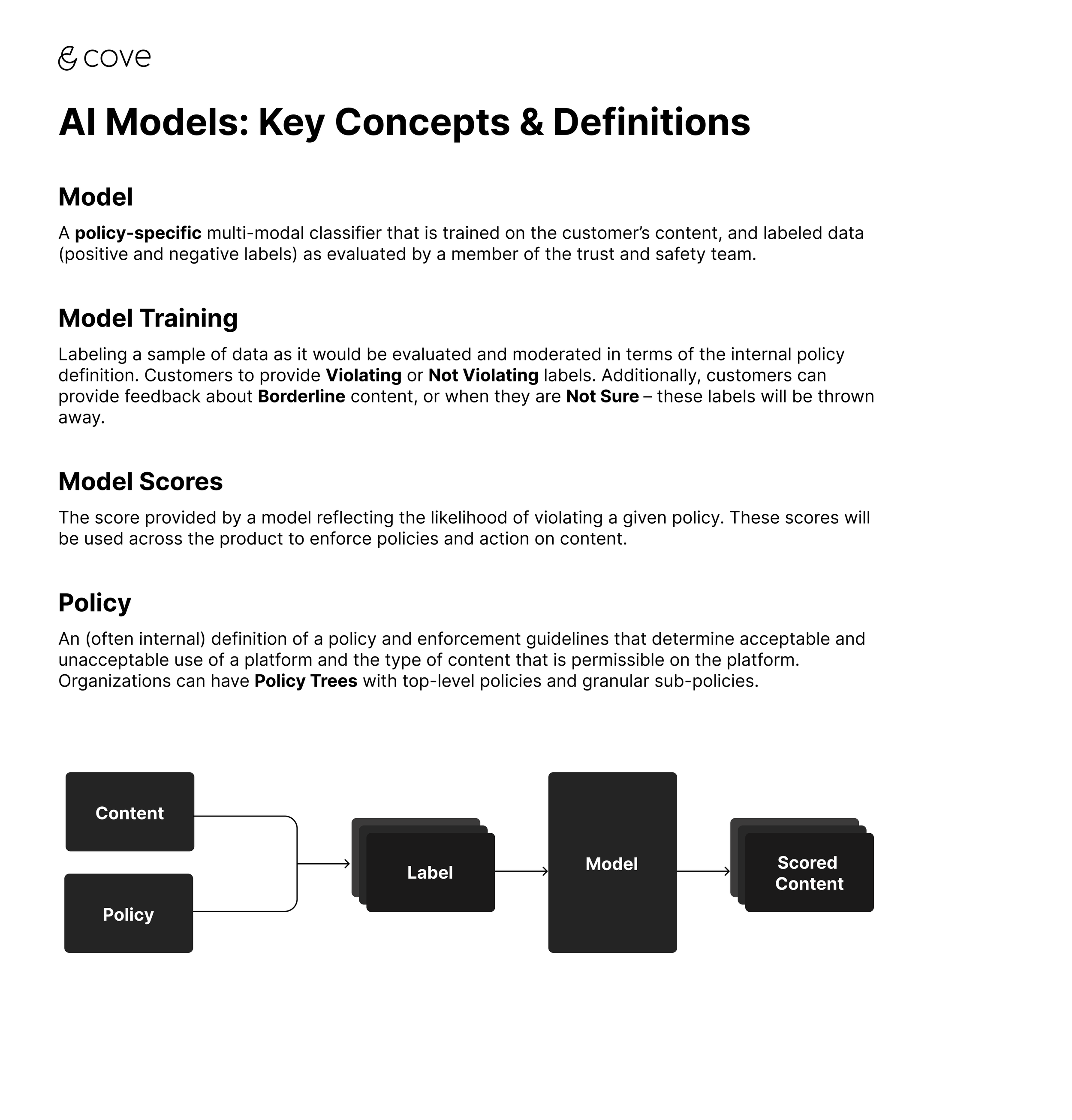

The demo included the input of a plain-English, text-based policy with enforcement guidelines, an upload of a large sample dataset, and a manual labeling exercise on a small set (~200 items), where someone from the customer’s organization would mark each piece of content as violating or not violating.

The Challenge

The actual model training was entirely engineering-dependent, not self-serve

Customers lacked access to the model, as tooling around it was limited

The manual workflows and lack of access posed scalability limitations for both customers & Cove

The Vision

Effective and simplified no-code AI model training for Trust & Safety leaders.

Comprehensive tools & intuitive workflows to evaluate, enhance, and manage model performance & lifecycle.

Step 1: Make it work

Turn the demo into reality

Basics

Load data into the system.

Collect labels directly through the UI.

Transform the “AI Enabled” indicator into an entry point for model training.

Additional functionality needed to make it work in-product

Support policy changes and updates.

Multi-modal and complex items (e.g., listings).

Enable label collection for fields within complex items.

Add escape hatches for restarting labeling, discarding labels, or editing policies.

Introduce interruptions to flag excessive negative labels.
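As a rough illustration of the interruption idea, the check could look something like the sketch below. It assumes "negative" means "not violating", and the names `LabelingSession` and `should_interrupt`, the minimum label count, and the 90% cutoff are all hypothetical, not the values used in the product.

```python
from dataclasses import dataclass


@dataclass
class LabelingSession:
    """Running tally of labels collected in one labeling exercise (hypothetical)."""
    violating: int = 0
    not_violating: int = 0

    def record(self, is_violating: bool) -> None:
        # Count each label as it is submitted through the UI.
        if is_violating:
            self.violating += 1
        else:
            self.not_violating += 1

    @property
    def total(self) -> int:
        return self.violating + self.not_violating


def should_interrupt(session: LabelingSession,
                     min_labels: int = 50,
                     max_negative_share: float = 0.9) -> bool:
    """Return True when the labeler should be interrupted.

    Assumption: an interruption is warranted once enough labels exist
    and the share of "not violating" labels exceeds the cutoff.
    """
    if session.total < min_labels:
        return False  # too early to judge the label balance
    return session.not_violating / session.total > max_negative_share


session = LabelingSession()
for _ in range(46):
    session.record(is_violating=False)
for _ in range(4):
    session.record(is_violating=True)
interrupt_now = should_interrupt(session)
```

Waiting for a minimum number of labels avoids interrupting someone who simply happened to start with a run of non-violating items.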

The final in-product model training flow

Though far from perfect, and just the stepping stone, this was the first working version of a self-serve model training flow on the Cove platform.

Explorations & final designs for in-product flow

Taking a step back

Once the promised demo flow was somewhat working in the product, I could take a step back and approach the problem as it should have been approached from the start: with a customer-first mindset.

The Audience

Trust & Safety teams – Policy Writers, Policy Directors, Heads of Trust and Safety, and Enforcement teams at organizations, all with a deep understanding of the policies and moderation decisions

Limited AI experience – Most of these teams have never used AI tooling, much less interacted directly with models

Tech savvy; not technical – These teams often sit within tech or tech-adjacent companies and use collaboration tools such as Notion, Slack, Figma, and Google Docs internally. Some are familiar with experimentation platforms and deployment tools

Make it nice

Build a cohesive product experience

The Approach

Redesign the product with AI Models as the focal point, removing existing platform constraints (UX, IA, demo flow). Define where models live and how they integrate with platform-wide heuristics and concepts.

Help users build a mental model of their custom AI Model, and bridge it with:

Internal concepts and frameworks (policies, enforcement, moderator decisions)

Cove-specific concepts (automated enforcement, moderator console, custom models)

Explore innovative ways to showcase custom AI models. Borrow versioning concepts from other spaces such as deployment platforms, collaboration tools, and document management.

Exploring divergent and new ways to visualize a model

A new design system

Driven by the need to balance growth & scalability

To support products like Custom AI Models, User Penalty Systems, and Spam Rules, we introduced a new design system focused on:

Consistency: Standardized UI components, visual styles, and interaction patterns across the platform.

Efficiency: Streamlined workflows and simplified navigation to improve core user experiences and reduce design time.

Additionally, with the shift to a “choose what you use” pricing model, we reorganized the navigation to:

Clearly separate products for better usability.

Establish distinct branding for each product line.

A successful first model

The first step was focused on building customer confidence in the first AI model for a policy through:

Iterative refining and re-training

Threshold optimization and content estimation

Automation to utilize model scores

Safe testing on live data
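To make "threshold optimization and content estimation" more concrete, here is a minimal sketch of one way it could work. It assumes a small labeled sample of (score, is_violating) pairs and a target precision. The function names and the precision-based rule are illustrative assumptions, not a description of how Cove actually computed thresholds.

```python
from typing import Optional, Sequence, Tuple


def pick_threshold(scored_labels: Sequence[Tuple[float, bool]],
                   target_precision: float = 0.9) -> Optional[float]:
    """Return the lowest score threshold whose precision meets the target.

    Choosing the lowest qualifying threshold captures as much violating
    content as possible while staying at or above the target precision.
    """
    candidates = sorted({score for score, _ in scored_labels})
    for threshold in candidates:
        flagged = [is_violating for score, is_violating in scored_labels
                   if score >= threshold]
        precision = sum(flagged) / len(flagged)
        if precision >= target_precision:
            return threshold
    return None  # no threshold reaches the target on this sample


def estimate_flagged_share(scores: Sequence[float], threshold: float) -> float:
    """Estimate the share of content that would be captured at a threshold."""
    if not scores:
        return 0.0
    return sum(score >= threshold for score in scores) / len(scores)


# Hypothetical labeled sample: (model score, human label).
labeled = [(0.9, True), (0.8, True), (0.6, False), (0.4, True), (0.2, False)]
threshold = pick_threshold(labeled, target_precision=0.9)

# Hypothetical scores for unlabeled content from the uploaded dataset.
share = estimate_flagged_share([0.1, 0.5, 0.85, 0.95], threshold)
```

Pairing the chosen threshold with an estimate of how much content it would capture is what lets a non-technical user judge a threshold by its operational impact rather than by the raw number.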

The new model training flow on the platform

The Model Lifecycle

Operationalizing the key post-training concepts to visualize and build confidence in the first model version

Model Usage on the platform

As customers build automations and rules based on model scores, a unified view of model usage across Cove was essential.

Key features included:

Display model thresholds for each rule.

Preview thresholds to see content types captured by each rule.

Edit, manage, or delete rules from this view.

Seamlessly switch rules to live after testing in the background.

Improving Models with Versions

Change Visibility

Each new model version brought its own advantages and drawbacks, and thresholds set for one version no longer applied consistently to the next.

To address this, we enabled new views to sort by new and live version scores, and to surface the most controversial samples (those with the biggest deltas in score between versions).

These tools helped users easily identify scoring differences, revise thresholds, and confidently evaluate new models.
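The "controversial samples" view boils down to ranking samples by how far the new version's score moved from the live version's score. A minimal sketch, assuming each sample carries both scores (the `ScoredSample` type and `most_controversial` function are hypothetical names for illustration):

```python
from typing import List, NamedTuple, Sequence


class ScoredSample(NamedTuple):
    """One piece of content scored by both model versions (hypothetical shape)."""
    sample_id: str
    live_score: float
    new_score: float


def score_delta(sample: ScoredSample) -> float:
    """Absolute change in score between the live and new model versions."""
    return abs(sample.new_score - sample.live_score)


def most_controversial(samples: Sequence[ScoredSample],
                       top_n: int = 10) -> List[ScoredSample]:
    """Return the samples whose scores changed the most, largest delta first."""
    return sorted(samples, key=score_delta, reverse=True)[:top_n]


samples = [
    ScoredSample("a", live_score=0.2, new_score=0.9),
    ScoredSample("b", live_score=0.5, new_score=0.55),
    ScoredSample("c", live_score=0.8, new_score=0.3),
]
top_two = most_controversial(samples, top_n=2)
```

Using the absolute delta means the view surfaces both content the new version newly flags and content it newly lets through, which are the two cases a reviewer needs to check before revising thresholds.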

Upgrading Models

Clearly communicate the current model's usage and the downstream impacts of upgrading to the newly trained version by:

Duplicating automations from the old model to maintain threshold adjustments.

Allowing safe testing of the new model without impacting live content.

Enabling easy removal of outdated rules that are no longer needed given the new model’s capabilities.

Providing clear visibility into rule thresholds and statuses.

Early learnings & feedback

Created a POC environment with limited capabilities to test these new features

Opted some customers into the new experience to experiment with their existing, in-use first models

Saw significant usage and engagement on the new testing and safety features, i.e. spot testing, model comparison, and scored samples

POC users engaged well with the model refinement flows because they were not given the self-serve training flow, which made the POC a great way to get feedback